Time Series forecasting using Deep learning

Placeholder: to be written later (deadline: before the end of the deep learning module).

import pandas as pd

import matplotlib.pyplot as plt

import seaborn as sns

import numpy as np

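data = pd.read_csv('hyderabad-us consulate-air-quality.csv', parse_dates=['date'])
data = data.sort_values('date')   # the raw file is not in chronological order
data.columns = ['date', 'pm25']   # assumes the file has exactly these two columns
data = data.reset_index()         # the pre-sort row numbers are kept in an 'index' column
data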

| | index | date | pm25 |
|---|---|---|---|
| 0 | 2295 | 2014-12-10 | 172 |
| 1 | 2296 | 2014-12-11 | 166 |
| 2 | 2297 | 2014-12-12 | 159 |
| 3 | 2298 | 2014-12-13 | 164 |
| 4 | 2299 | 2014-12-14 | 166 |
| ... | ... | ... | ... |
| 2309 | 0 | 2021-11-01 | 155 |
| 2310 | 1 | 2021-11-02 | 115 |
| 2311 | 2 | 2021-11-03 | 67 |
| 2312 | 3 | 2021-11-04 | 112 |
| 2313 | 4 | 2021-11-05 | 115 |

2314 rows × 3 columns

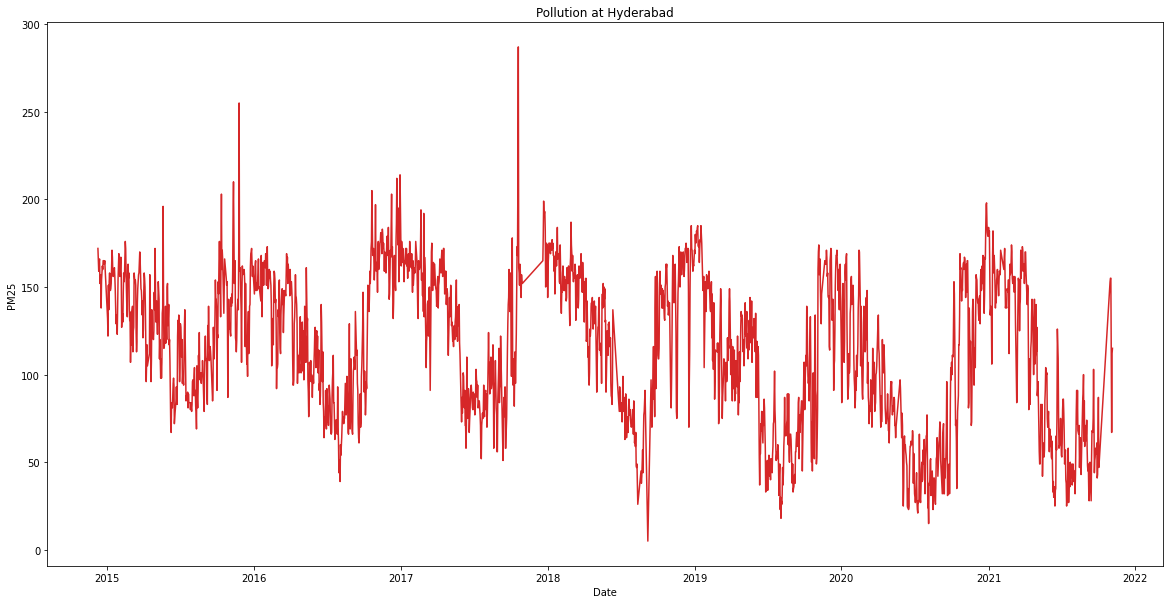

plt.figure(figsize=(20,10))

plt.plot(data.date, data.pm25, color='tab:red')

plt.gca().set(title='Pollution at Hyderabad', xlabel='Date', ylabel='PM25')

plt.show()

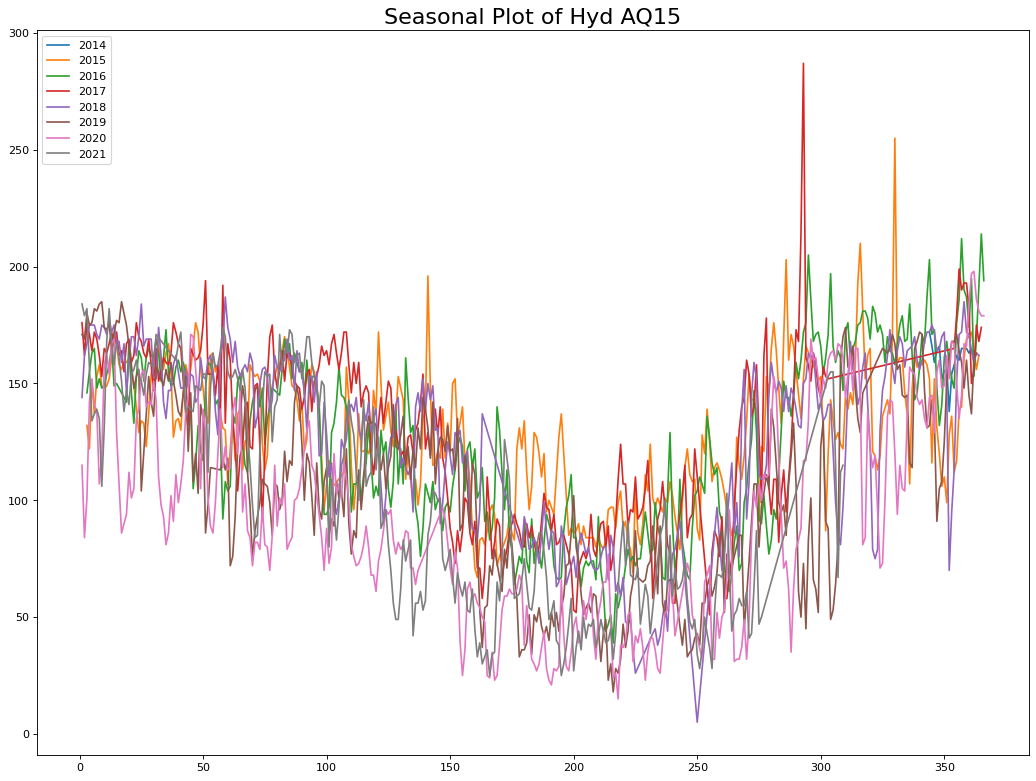

From this plot, we can see that pollution is higher during the winter months and lower during the summer months. The effect recurs every year, indicating a seasonal pattern in the data. There seems to be no clear long-term increasing or decreasing trend. This can be visualised better by decomposing the data into three components:

1. Seasonal component: The component that varies with season

2. Trend: Increasing or decreasing pattern

3. Residual (random) component: what remains after the trend and seasonal components are removed; it has no systematic pattern
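For intuition, an additive decomposition assumes y[t] = trend[t] + seasonal[t] + residual[t]. The NumPy sketch below follows the classical recipe, which is roughly what statsmodels' seasonal_decompose does (its documentation calls it a naive decomposition): the trend is a centred moving average over one period, the seasonal part is the average detrended value at each position of the cycle (shifted to sum to zero), and the residual is what is left. The function name classical_additive_decompose and the toy series are illustrative assumptions, not part of the notebook.

```python
import numpy as np


def classical_additive_decompose(y, period):
    """Split y into trend + seasonal + residual (classical additive sketch)."""
    y = np.asarray(y, dtype=float)
    n = len(y)
    # Trend: centred moving average spanning one full period
    # (an even period needs a 2 x period average so the window stays centred)
    if period % 2 == 0:
        weights = np.r_[0.5, np.ones(period - 1), 0.5] / period
    else:
        weights = np.ones(period) / period
    half = len(weights) // 2
    trend = np.full(n, np.nan)  # undefined at both edges
    trend[half:n - half] = np.convolve(y, weights, mode="valid")
    # Seasonal: mean detrended value at each position of the cycle, centred on zero
    detrended = y - trend
    phase_means = np.array([np.nanmean(detrended[i::period]) for i in range(period)])
    phase_means -= phase_means.mean()
    seasonal = np.resize(phase_means, n)  # repeat the cycle to length n
    # Residual: whatever trend and seasonality do not explain
    resid = y - trend - seasonal
    return trend, seasonal, resid


# Toy series: linear trend plus a repeating 4-step pattern
t = np.arange(40)
pattern = np.array([2.0, -1.0, 0.0, -1.0])
y = 10 + 0.5 * t + np.resize(pattern, 40)
trend, seasonal, resid = classical_additive_decompose(y, period=4)
```

On this synthetic series the trend and the 4-step pattern are recovered exactly away from the edges, and the residual there is zero.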

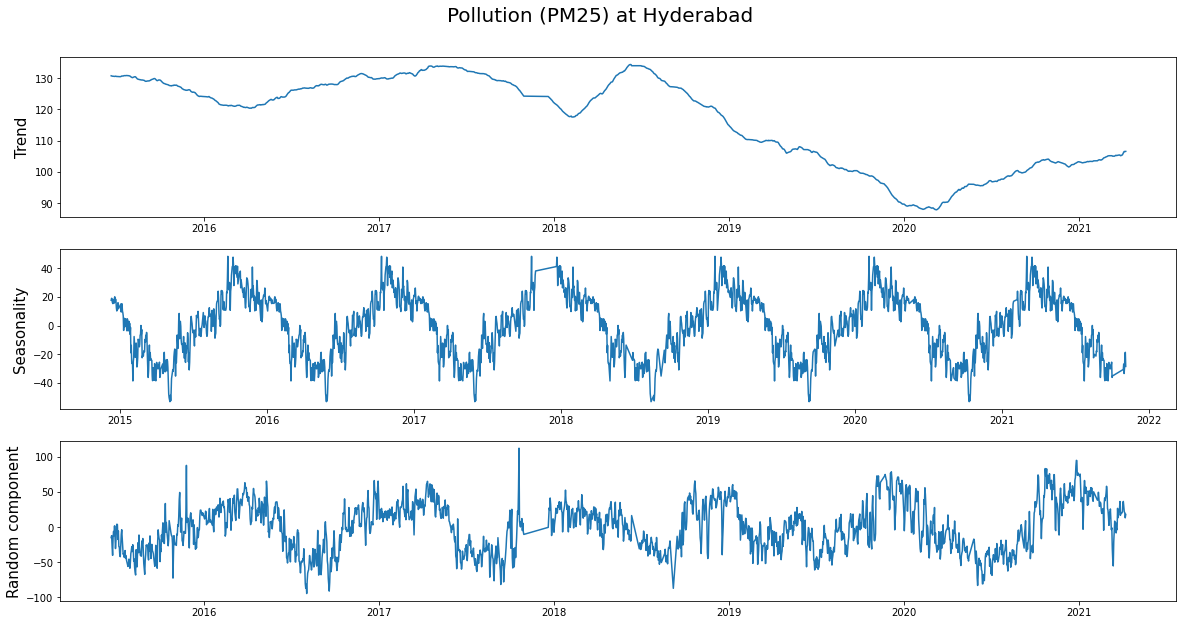

from statsmodels.tsa.seasonal import seasonal_decompose

result = seasonal_decompose(data.pm25, model='additive', period = 365)

fs, axs = plt.subplots(3, figsize=(20,10))

plt.suptitle('Pollution (PM25) at Hyderabad', fontsize = 20, y = 0.95)

axs[0].plot(data.date, result.trend)

axs[1].plot(data.date, result.seasonal)

axs[2].plot(data.date, result.resid)

axs[0].set_ylabel('Trend', fontsize=15)

axs[1].set_ylabel('Seasonality', fontsize=15)

axs[2].set_ylabel('Random component', fontsize=15)

plt.show()

Looking at the trend component, we can see that pollution decreased during 2020 (probably due to COVID-19) and is slowly rising again as the country gets back on its feet.
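One caveat: seasonal_decompose(..., period=365) counts rows, not calendar days. The table above has 2314 rows between 2014-12-10 and 2021-11-05, a span of about 2,520 calendar days, so some days appear to be missing and a 365-row period drifts away from the calendar year. A possible fix, sketched here under the assumption that linear interpolation is acceptable for the gaps, is to reindex to one row per day before decomposing. The helper name to_daily is hypothetical.

```python
import pandas as pd


def to_daily(df, date_col="date", value_col="pm25"):
    """Return the series with exactly one value per calendar day (sketch)."""
    s = df.set_index(date_col)[value_col].astype(float).sort_index()
    s = s[~s.index.duplicated(keep="first")]  # asfreq needs unique timestamps
    s = s.asfreq("D")                          # missing days become NaN
    return s.interpolate(method="time")        # fill gaps linearly in time


toy = pd.DataFrame({
    "date": pd.to_datetime(["2021-01-01", "2021-01-02", "2021-01-05"]),
    "pm25": [100, 110, 140],
})
daily = to_daily(toy)  # five rows, 2021-01-01 to 2021-01-05
```

Applied to the notebook's frame, to_daily(data) would give a series in which period=365 corresponds to one calendar year.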

data['year'] = data.date.dt.year

data['day'] = data.date.dt.dayofyear

plt.figure(figsize=(16,12), dpi=80)
for y in data.year.unique():
    # one line per year, indexed by day of year, so the years can be overlaid
    plt.plot('day', 'pm25', data=data.loc[data.year==y, :], label=y)
plt.title("Seasonal Plot of Hyd AQ15", fontsize=20)
plt.legend(loc="upper left")
plt.show()

Simple Neural Net (Perceptron)

from keras.models import Sequential

from keras.layers import Dense, SimpleRNN, Lambda, LSTM

import tensorflow as tf

dataset = tf.data.Dataset.range(20)

dataset = dataset.window(15, shift=1, drop_remainder=True)

dataset = dataset.flat_map(lambda window: window.batch(15))

dataset = dataset.map(lambda window: (window[:-1], window[-1:]))

dataset = dataset.shuffle(buffer_size=3)

dataset = dataset.batch(1).prefetch(1)

for x, y in dataset:
    print("x = ", x.numpy())
    print("y = ", y.numpy())

x = [[ 1 2 3 4 5 6 7 8 9 10 11 12 13 14]]

y = [[15]]

x = [[ 3 4 5 6 7 8 9 10 11 12 13 14 15 16]]

y = [[17]]

x = [[ 2 3 4 5 6 7 8 9 10 11 12 13 14 15]]

y = [[16]]

x = [[ 5 6 7 8 9 10 11 12 13 14 15 16 17 18]]

y = [[19]]

x = [[ 0 1 2 3 4 5 6 7 8 9 10 11 12 13]]

y = [[14]]

x = [[ 4 5 6 7 8 9 10 11 12 13 14 15 16 17]]

y = [[18]]
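The same (x, y) pairs can be produced eagerly with NumPy, which makes the shapes easy to inspect: each window of window_size + 1 consecutive values is split into window_size inputs and one label. This is a sketch using numpy.lib.stride_tricks.sliding_window_view (NumPy 1.20 or later); make_windows is a hypothetical helper and, unlike the tf.data pipeline, it does not shuffle.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def make_windows(series, window_size):
    """Return (inputs, labels) for every window of window_size + 1 consecutive values."""
    series = np.asarray(series)
    # shape (len(series) - window_size, window_size + 1)
    windows = sliding_window_view(series, window_size + 1)
    return windows[:, :-1], windows[:, -1]


x, y = make_windows(np.arange(20), window_size=14)
print(x.shape, y)  # (6, 14) [14 15 16 17 18 19]
```

In the tf.data version above, the label is window[-1:], which keeps a length-1 axis; that is why y prints as [[15]] rather than [15].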

def windowed_dataset(series, window_size, batch_size, shuffle_buffer):
    # Slide a window of window_size + 1 values over the series, one step at a time
    dataset = tf.data.Dataset.from_tensor_slices(series)
    dataset = dataset.window(window_size + 1, shift=1, drop_remainder=True)
    dataset = dataset.flat_map(lambda window: window.batch(window_size + 1))
    # Shuffle, then split each window into inputs (first window_size values) and label (last value)
    dataset = dataset.shuffle(shuffle_buffer).map(lambda window: (window[:-1], window[-1]))
    dataset = dataset.batch(batch_size).prefetch(1)
    return dataset

dataset = windowed_dataset(data.pm25, 14, 1, 3)

for x, y in dataset:
    print("x = ", x.numpy())
    print("y = ", y.numpy())

x = [[159 164 166 152 155 157 138 154 158 162 160 165 165 165]]

y = [163]

x = [[172 166 159 164 166 152 155 157 138 154 158 162 160 165]]

y = [165]

... (output truncated)

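split_time = len(data) - 365*2   # 2314 - 730 = 1584: hold out the last 730 rows for validation
time_train = data.date[:split_time]
x_train = data.pm25[:split_time]
time_valid = data.date[split_time:]
x_valid = data.pm25[split_time:]
window_size = 14
batch_size = 1
shuffle_buffer_size = split_time  # at least as large as the number of training windows, so the shuffle is complete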
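The split determines how many training examples each epoch sees. With 2314 rows and the last 730 held out, the training slice has 1584 values; sliding a 15-value window (14 inputs plus 1 label) over it gives 1584 - 15 + 1 = 1570 windows, which matches the 1570 steps per epoch in the training logs below with batch_size = 1. A small hypothetical helper that reproduces this arithmetic:

```python
def split_counts(n_rows, valid_len, window_size, batch_size=1):
    """Return (split_time, training windows, steps per epoch) for a windowed split."""
    split_time = n_rows - valid_len                  # first validation index
    n_windows = split_time - (window_size + 1) + 1   # windows that fit in the training slice
    steps = -(-n_windows // batch_size)              # ceiling division: last batch may be partial
    return split_time, n_windows, steps


print(split_counts(2314, 365 * 2, 14))  # (1584, 1570, 1570)
```

With a larger batch size the number of windows stays the same, but the steps per epoch shrink accordingly.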

dataset = windowed_dataset(x_train, window_size, batch_size, shuffle_buffer_size)

l0 = tf.keras.layers.Dense(1, input_shape=[window_size])

model = tf.keras.models.Sequential([l0])

model.compile(loss="mse", optimizer=tf.keras.optimizers.SGD(learning_rate=1e-6, momentum=0.9), metrics=["mae"])

model.fit(dataset,epochs=100)

print("Layer weights {}".format(l0.get_weights()))

Epoch 1/100

1570/1570 [==============================] - 2s 764us/step - loss: 2513.6338 - mae: 36.4270

Epoch 2/100

1570/1570 [==============================] - 2s 1ms/step - loss: 2627.8843 - mae: 38.2651

Epoch 3/100

1570/1570 [==============================] - 1s 625us/step - loss: 4424.3721 - mae: 48.4370

Epoch 4/100

1570/1570 [==============================] - 1s 623us/step - loss: 5549.5840 - mae: 53.6082

Epoch 5/100

1570/1570 [==============================] - 1s 637us/step - loss: 2992.0337 - mae: 40.5288

Epoch 6/100

1570/1570 [==============================] - 1s 846us/step - loss: 3471.9915 - mae: 44.3860

Epoch 7/100

1570/1570 [==============================] - 3s 2ms/step - loss: 2531.1494 - mae: 34.7863

Epoch 8/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1852.7971 - mae: 32.0643

Epoch 9/100

1570/1570 [==============================] - 1s 661us/step - loss: 1253.9712 - mae: 26.1902

Epoch 10/100

1570/1570 [==============================] - 1s 618us/step - loss: 2161.4375 - mae: 35.7045

Epoch 11/100

1570/1570 [==============================] - 1s 767us/step - loss: 1726.3955 - mae: 30.9938

Epoch 12/100

1570/1570 [==============================] - 2s 967us/step - loss: 1668.6731 - mae: 31.8759

Epoch 13/100

1570/1570 [==============================] - 1s 662us/step - loss: 2064.4319 - mae: 34.0762

Epoch 14/100

1570/1570 [==============================] - 1s 630us/step - loss: 2944.2009 - mae: 39.0366

Epoch 15/100

1570/1570 [==============================] - 2s 1ms/step - loss: 2634.4287 - mae: 37.2135

Epoch 16/100

1570/1570 [==============================] - 1s 616us/step - loss: 2405.7512 - mae: 35.9506

Epoch 17/100

1570/1570 [==============================] - 1s 617us/step - loss: 3331.7898 - mae: 42.2314

Epoch 18/100

1570/1570 [==============================] - 2s 949us/step - loss: 1733.8339 - mae: 30.9589

Epoch 19/100

1570/1570 [==============================] - 1s 734us/step - loss: 2795.8682 - mae: 38.8242

Epoch 20/100

1570/1570 [==============================] - 1s 872us/step - loss: 3353.5576 - mae: 41.7762

Epoch 21/100

1570/1570 [==============================] - 1s 808us/step - loss: 1974.5398 - mae: 33.4402

Epoch 22/100

1570/1570 [==============================] - 1s 873us/step - loss: 1905.5634 - mae: 32.1671

Epoch 23/100

1570/1570 [==============================] - 2s 837us/step - loss: 2250.3162 - mae: 34.8362

Epoch 24/100

1570/1570 [==============================] - 2s 1ms/step - loss: 2360.7571 - mae: 36.5247

Epoch 25/100

1570/1570 [==============================] - 1s 705us/step - loss: 2153.8865 - mae: 35.0538

Epoch 26/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1548.2344 - mae: 29.6058

Epoch 27/100

1570/1570 [==============================] - 2s 942us/step - loss: 2877.3025 - mae: 39.9668

Epoch 28/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1990.7844 - mae: 32.4618

Epoch 29/100

1570/1570 [==============================] - 2s 831us/step - loss: 1433.8342 - mae: 28.8914

Epoch 30/100

1570/1570 [==============================] - 1s 680us/step - loss: 2065.6316 - mae: 34.1275

Epoch 31/100

1570/1570 [==============================] - 2s 977us/step - loss: 2812.8083 - mae: 38.1380

Epoch 32/100

1570/1570 [==============================] - 2s 814us/step - loss: 1840.2141 - mae: 32.0926

Epoch 33/100

1570/1570 [==============================] - 2s 970us/step - loss: 1858.1345 - mae: 32.9561

Epoch 34/100

1570/1570 [==============================] - 1s 735us/step - loss: 2243.5950 - mae: 33.8061

Epoch 35/100

1570/1570 [==============================] - 1s 624us/step - loss: 1999.2378 - mae: 33.1796

Epoch 36/100

1570/1570 [==============================] - 1s 609us/step - loss: 2803.6067 - mae: 37.3053

Epoch 37/100

1570/1570 [==============================] - 2s 1ms/step - loss: 3408.6633 - mae: 43.0755

Epoch 38/100

1570/1570 [==============================] - 1s 610us/step - loss: 7617.3584 - mae: 53.5411

Epoch 39/100

1570/1570 [==============================] - 2s 1ms/step - loss: 3608.6226 - mae: 41.9082

Epoch 40/100

1570/1570 [==============================] - 1s 622us/step - loss: 1665.9635 - mae: 30.1138

Epoch 41/100

1570/1570 [==============================] - 1s 664us/step - loss: 2472.9622 - mae: 34.7668

Epoch 42/100

1570/1570 [==============================] - 2s 1ms/step - loss: 4391.3267 - mae: 44.2831

Epoch 43/100

1570/1570 [==============================] - 1s 658us/step - loss: 3736.1123 - mae: 43.4484

Epoch 44/100

1570/1570 [==============================] - 2s 1ms/step - loss: 2405.3022 - mae: 37.2903

Epoch 45/100

1570/1570 [==============================] - 1s 637us/step - loss: 2640.0657 - mae: 37.7513

Epoch 46/100

1570/1570 [==============================] - 1s 624us/step - loss: 1698.6646 - mae: 30.3015

Epoch 47/100

1570/1570 [==============================] - 2s 904us/step - loss: 2157.7537 - mae: 34.5056

Epoch 48/100

1570/1570 [==============================] - 2s 850us/step - loss: 2351.3657 - mae: 36.3811

Epoch 49/100

1570/1570 [==============================] - 2s 923us/step - loss: 3389.1948 - mae: 42.5566

Epoch 50/100

1570/1570 [==============================] - 1s 753us/step - loss: 2557.7690 - mae: 36.7457

Epoch 51/100

1570/1570 [==============================] - 1s 866us/step - loss: 2274.4500 - mae: 35.3559

Epoch 52/100

1570/1570 [==============================] - 2s 814us/step - loss: 1476.2098 - mae: 29.2134

Epoch 53/100

1570/1570 [==============================] - 2s 895us/step - loss: 3051.8118 - mae: 41.4144

Epoch 54/100

1570/1570 [==============================] - 2s 837us/step - loss: 3997.4836 - mae: 42.0719

Epoch 55/100

1570/1570 [==============================] - 1s 632us/step - loss: 2640.8892 - mae: 37.8363

Epoch 56/100

1570/1570 [==============================] - 1s 676us/step - loss: 2127.0208 - mae: 33.5877

Epoch 57/100

1570/1570 [==============================] - 2s 1ms/step - loss: 2155.6133 - mae: 35.2079

Epoch 58/100

1570/1570 [==============================] - 1s 628us/step - loss: 2110.5273 - mae: 35.1694

Epoch 59/100

1570/1570 [==============================] - 2s 1ms/step - loss: 3205.5698 - mae: 41.6258

Epoch 60/100

1570/1570 [==============================] - 1s 681us/step - loss: 2516.9368 - mae: 37.6260

Epoch 61/100

1570/1570 [==============================] - 2s 1ms/step - loss: 3302.0437 - mae: 40.9913

Epoch 62/100

1570/1570 [==============================] - 1s 621us/step - loss: 2135.1140 - mae: 34.7838

Epoch 63/100

1570/1570 [==============================] - 1s 631us/step - loss: 1920.6626 - mae: 32.1499

Epoch 64/100

1570/1570 [==============================] - 2s 1ms/step - loss: 2632.5876 - mae: 37.2169

Epoch 65/100

1570/1570 [==============================] - 1s 642us/step - loss: 1719.2740 - mae: 30.8670

Epoch 66/100

1570/1570 [==============================] - 1s 618us/step - loss: 2797.1809 - mae: 38.1031

Epoch 67/100

1570/1570 [==============================] - 1s 821us/step - loss: 2052.6494 - mae: 33.3538

Epoch 68/100

1570/1570 [==============================] - 2s 826us/step - loss: 2140.2795 - mae: 33.2761

Epoch 69/100

1570/1570 [==============================] - 1s 810us/step - loss: 2568.9514 - mae: 35.5570

Epoch 70/100

1570/1570 [==============================] - 2s 916us/step - loss: 1741.9554 - mae: 31.4823

Epoch 71/100

1570/1570 [==============================] - 1s 640us/step - loss: 3985.8354 - mae: 41.0881

Epoch 72/100

1570/1570 [==============================] - 1s 625us/step - loss: 1813.1409 - mae: 31.6561

Epoch 73/100

1570/1570 [==============================] - 2s 1ms/step - loss: 4502.4375 - mae: 45.8338

Epoch 74/100

1570/1570 [==============================] - 1s 621us/step - loss: 2171.1902 - mae: 34.1269

Epoch 75/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1887.9999 - mae: 33.1114

Epoch 76/100

1570/1570 [==============================] - 1s 614us/step - loss: 1521.0691 - mae: 29.7172

Epoch 77/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1950.2441 - mae: 32.6277

Epoch 78/100

1570/1570 [==============================] - 1s 617us/step - loss: 1778.5680 - mae: 30.9782

Epoch 79/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1485.2815 - mae: 29.3998

Epoch 80/100

1570/1570 [==============================] - 1s 602us/step - loss: 1437.3258 - mae: 29.3189

Epoch 81/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1997.8091 - mae: 34.4411

Epoch 82/100

1570/1570 [==============================] - 1s 587us/step - loss: 2212.0806 - mae: 34.5182

Epoch 83/100

1570/1570 [==============================] - 1s 587us/step - loss: 2208.1921 - mae: 33.5488

Epoch 84/100

1570/1570 [==============================] - 1s 590us/step - loss: 2176.0520 - mae: 35.4611

Epoch 85/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1925.0211 - mae: 32.5826

Epoch 86/100

1570/1570 [==============================] - 1s 599us/step - loss: 3626.3096 - mae: 42.7268

Epoch 87/100

1570/1570 [==============================] - 2s 1ms/step - loss: 1902.9331 - mae: 33.2850

Epoch 88/100

1570/1570 [==============================] - 1s 588us/step - loss: 1713.0077 - mae: 31.0925

Epoch 89/100

1570/1570 [==============================] - 1s 584us/step - loss: 1231.0505 - mae: 26.7580

Epoch 90/100

1570/1570 [==============================] - 2s 902us/step - loss: 2903.7974 - mae: 39.2899

Epoch 91/100

1570/1570 [==============================] - 1s 704us/step - loss: 4899.5703 - mae: 49.9197

Epoch 92/100

1570/1570 [==============================] - 1s 720us/step - loss: 4719.0454 - mae: 45.7755

Epoch 93/100

1570/1570 [==============================] - 2s 915us/step - loss: 2016.2034 - mae: 33.3431

Epoch 94/100

1570/1570 [==============================] - 1s 746us/step - loss: 1934.7463 - mae: 32.4981

Epoch 95/100

1570/1570 [==============================] - 2s 930us/step - loss: 2149.3330 - mae: 34.3910

Epoch 96/100

1570/1570 [==============================] - 1s 583us/step - loss: 2434.7302 - mae: 36.1103

Epoch 97/100

1570/1570 [==============================] - 1s 585us/step - loss: 2350.9609 - mae: 35.0144

Epoch 98/100

1570/1570 [==============================] - 1s 612us/step - loss: 2069.1951 - mae: 33.9281

Epoch 99/100

1570/1570 [==============================] - 1s 596us/step - loss: 2446.0693 - mae: 36.7350

Epoch 100/100

1570/1570 [==============================] - 1s 626us/step - loss: 2440.7476 - mae: 33.8709

Layer weights [array([[-0.04500467],
       [-0.05519092],
       [ 0.09815453],
       [-0.00597653],
       [ 0.30430666],
       [-0.00321147],
       [ 0.07927534],
       [ 0.00668947],
       [ 0.04348003],
       [ 0.11238142],
       [-0.05560566],
       [-0.29955685],
       [ 0.01853935],
       [ 0.76262796]], dtype=float32), array([0.7891302], dtype=float32)]
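A single Dense(1) layer on a 14-value window is a linear autoregression. The forecast is a weighted sum of the previous 14 values plus a bias, so the weights printed above are those coefficients; the largest, about 0.76, multiplies the most recent day. For a linear model, the weights that minimise training MSE can also be found in closed form with least squares, avoiding the noisy SGD run seen in the logs. This is a swapped-in technique, not what the notebook does. fit_linear_ar is hypothetical, and the test series is a synthetic sinusoid with an offset, which obeys an exact two-lag linear recurrence.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def fit_linear_ar(series, window_size):
    """Least-squares w, b so that y[t] is approximated by dot(w, y[t-window_size:t]) + b."""
    series = np.asarray(series, dtype=float)
    windows = sliding_window_view(series, window_size + 1)
    X, y = windows[:, :-1], windows[:, -1]       # inputs ordered oldest to newest
    X1 = np.hstack([X, np.ones((len(X), 1))])   # extra column of ones for the bias
    coef, *_ = np.linalg.lstsq(X1, y, rcond=None)
    return coef[:-1], coef[-1]


omega = 2 * np.pi / 12
t = np.arange(200)
# Satisfies x[t] = 2cos(omega) x[t-1] - x[t-2] + 100 * (2 - 2cos(omega))
series = 100 + 5 * np.sin(omega * t)
weights, bias = fit_linear_ar(series, window_size=2)
```

On the notebook's data the equivalent call would be fit_linear_ar(x_train, 14). Its weights play the same role as l0.get_weights(), although the values need not match.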

def plot_series(time, series, format="-", start=0, end=None):
    plt.plot(time[start:end], series[start:end], format)
    plt.xlabel("Time")
    plt.ylabel("Value")
    plt.grid(True)

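forecast = []
# Predict the value that follows each 14-day window, one window at a time
for time in range(len(data.pm25) - window_size):
    forecast.append(model.predict(np.array(data.pm25[time:time + window_size])[np.newaxis]))
# Keep only the predictions whose targets fall in the validation period (index split_time onward)
forecast = forecast[split_time-window_size:]
results = np.array(forecast)[:, 0, 0]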
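Calling model.predict once per window is slow (about 2300 separate calls). Stacking all windows into one array gives the same predictions in a single call. The slice starting at split_time - window_size works because window i ends at index i + window_size - 1 and forecasts index i + window_size. In this sketch, rolling_forecast is hypothetical and predict_fn stands in for something like model.predict. The example uses a naive "repeat the last value" predictor so it runs without TensorFlow.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def rolling_forecast(predict_fn, series, window_size, split_time):
    """One-step-ahead forecasts for series[split_time:], computed in one batch."""
    series = np.asarray(series, dtype=float)
    # All windows except the last, which has no following value to forecast
    windows = sliding_window_view(series, window_size)[:-1]
    preds = np.asarray(predict_fn(windows)).reshape(-1)  # one forecast per window
    # Window i forecasts index i + window_size, so start at split_time - window_size
    return preds[split_time - window_size:]


series = np.arange(30.0)
naive = lambda w: w[:, -1]  # stand-in model: tomorrow equals today
preds = rolling_forecast(naive, series, window_size=5, split_time=20)
```

With the notebook's objects this would be rolling_forecast(model.predict, data.pm25, window_size, split_time), which should agree with results up to floating-point noise.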

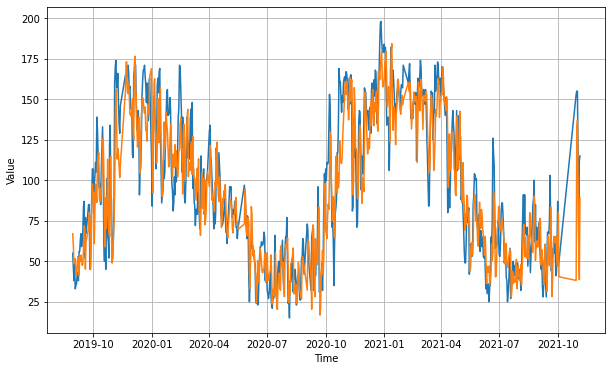

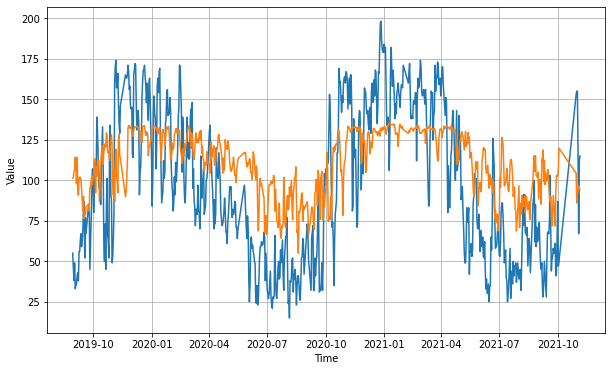

plt.figure(figsize=(10, 6))

plot_series(time_valid, x_valid)

plot_series(time_valid, results)

tf.keras.metrics.mean_absolute_error(x_valid, results).numpy()

15.45758

This model is currently deployed on Azure.
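A validation MAE of about 15.5 is easier to interpret next to a naive baseline. A common choice for daily data is persistence, which predicts that each value equals the previous one. Because the data has fewer rows than calendar days in its date range, "previous" here means the previous row. A minimal sketch; naive_forecast_mae is a hypothetical helper.

```python
import numpy as np


def naive_forecast_mae(series, split_time):
    """MAE of predicting each value in series[split_time:] by the value just before it."""
    series = np.asarray(series, dtype=float)
    actual = series[split_time:]
    previous = series[split_time - 1:-1]   # the same values shifted by one step
    return float(np.mean(np.abs(actual - previous)))


print(naive_forecast_mae([1, 2, 4, 7, 11], split_time=2))  # 3.0
```

Running naive_forecast_mae(data.pm25, split_time) gives the number a model should beat before its MAE counts as an improvement.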

Deep neural network

dataset = windowed_dataset(x_train, window_size, batch_size, shuffle_buffer_size)

model = tf.keras.models.Sequential([
    tf.keras.layers.Dense(14, input_shape=[window_size], activation="relu"),
    tf.keras.layers.Dense(14, activation="relu"),
    tf.keras.layers.Dense(1)
])

model.compile(loss="mse", optimizer=tf.keras.optimizers.SGD(learning_rate=1e-6, momentum=0.9), metrics=["mae"])

model.fit(dataset,epochs=100)

Epoch 1/100

1570/1570 [==============================] - 1s 730us/step - loss: 845.5267 - mae: 21.9859

Epoch 2/100

1570/1570 [==============================] - 1s 724us/step - loss: 1019.4009 - mae: 24.1638

Epoch 3/100

1570/1570 [==============================] - 1s 717us/step - loss: 722.2228 - mae: 20.5732

Epoch 4/100

1570/1570 [==============================] - 1s 718us/step - loss: 701.0610 - mae: 20.4133

Epoch 5/100

1570/1570 [==============================] - 1s 716us/step - loss: 677.8721 - mae: 19.7713

Epoch 6/100

1570/1570 [==============================] - 1s 689us/step - loss: 897.4562 - mae: 22.3025

Epoch 7/100

1570/1570 [==============================] - 1s 698us/step - loss: 739.0591 - mae: 20.7214

Epoch 8/100

1570/1570 [==============================] - 1s 688us/step - loss: 659.6113 - mae: 19.5167

Epoch 9/100

1570/1570 [==============================] - 1s 749us/step - loss: 774.5299 - mae: 21.2334

Epoch 10/100

1570/1570 [==============================] - 1s 725us/step - loss: 732.1213 - mae: 20.3679

Epoch 11/100

1570/1570 [==============================] - 1s 727us/step - loss: 600.1824 - mae: 18.8780

Epoch 12/100

1570/1570 [==============================] - 1s 661us/step - loss: 717.2169 - mae: 20.2712

Epoch 13/100

1570/1570 [==============================] - 1s 685us/step - loss: 627.1095 - mae: 19.3082

Epoch 14/100

1570/1570 [==============================] - 1s 686us/step - loss: 713.9377 - mae: 19.4658

Epoch 15/100

1570/1570 [==============================] - 1s 696us/step - loss: 671.5330 - mae: 19.8928

Epoch 16/100

1570/1570 [==============================] - 1s 689us/step - loss: 809.9895 - mae: 21.1933

Epoch 17/100

1570/1570 [==============================] - 1s 697us/step - loss: 626.8463 - mae: 18.9511

Epoch 18/100

1570/1570 [==============================] - 1s 690us/step - loss: 664.7856 - mae: 19.5640

Epoch 19/100

1570/1570 [==============================] - 1s 702us/step - loss: 695.1313 - mae: 20.3170

Epoch 20/100

1570/1570 [==============================] - 1s 685us/step - loss: 844.7645 - mae: 21.5548

Epoch 21/100

1570/1570 [==============================] - 1s 749us/step - loss: 750.3210 - mae: 20.9530

Epoch 22/100

1570/1570 [==============================] - 1s 682us/step - loss: 631.6428 - mae: 19.1385

Epoch 23/100

1570/1570 [==============================] - 1s 694us/step - loss: 721.4250 - mae: 20.0112

Epoch 24/100

1570/1570 [==============================] - 1s 687us/step - loss: 879.2552 - mae: 22.0182

Epoch 25/100

1570/1570 [==============================] - 1s 684us/step - loss: 827.2421 - mae: 21.6464

Epoch 26/100

1570/1570 [==============================] - 1s 700us/step - loss: 701.4094 - mae: 20.2091

Epoch 27/100

1570/1570 [==============================] - 1s 691us/step - loss: 695.8590 - mae: 20.0878

Epoch 28/100

1570/1570 [==============================] - 1s 692us/step - loss: 703.5710 - mae: 20.0760

Epoch 29/100

1570/1570 [==============================] - 1s 699us/step - loss: 632.8063 - mae: 19.4138

Epoch 30/100

1570/1570 [==============================] - 1s 701us/step - loss: 653.7804 - mae: 19.7783

Epoch 31/100

1570/1570 [==============================] - 1s 691us/step - loss: 1016.0288 - mae: 23.3376

Epoch 32/100

1570/1570 [==============================] - 1s 701us/step - loss: 695.7961 - mae: 20.5422

Epoch 33/100

1570/1570 [==============================] - 1s 719us/step - loss: 642.6339 - mae: 19.6469

Epoch 34/100

1570/1570 [==============================] - 1s 753us/step - loss: 604.4878 - mae: 18.8055

Epoch 35/100

1570/1570 [==============================] - 1s 696us/step - loss: 669.7369 - mae: 20.0942

Epoch 36/100

1570/1570 [==============================] - 1s 697us/step - loss: 778.4235 - mae: 20.4621

Epoch 37/100

1570/1570 [==============================] - 1s 700us/step - loss: 697.3196 - mae: 19.9764

Epoch 38/100

1570/1570 [==============================] - 1s 697us/step - loss: 600.9893 - mae: 18.5254

Epoch 39/100

1570/1570 [==============================] - 1s 704us/step - loss: 710.3656 - mae: 20.0062

Epoch 40/100

1570/1570 [==============================] - 1s 697us/step - loss: 704.0516 - mae: 20.1501

Epoch 41/100

1570/1570 [==============================] - 1s 695us/step - loss: 733.9904 - mae: 20.4308

Epoch 42/100

1570/1570 [==============================] - 1s 697us/step - loss: 555.9091 - mae: 18.1039

Epoch 43/100

1570/1570 [==============================] - 1s 683us/step - loss: 647.9240 - mae: 18.9008

Epoch 44/100

1570/1570 [==============================] - 1s 708us/step - loss: 696.8072 - mae: 20.2532

Epoch 45/100

1570/1570 [==============================] - 1s 721us/step - loss: 639.6487 - mae: 19.3308

Epoch 46/100

1570/1570 [==============================] - 1s 726us/step - loss: 554.9702 - mae: 17.9829

Epoch 47/100

1570/1570 [==============================] - 1s 725us/step - loss: 605.5833 - mae: 18.8098

Epoch 48/100

1570/1570 [==============================] - 1s 745us/step - loss: 823.3652 - mae: 22.1876

Epoch 49/100

1570/1570 [==============================] - 1s 745us/step - loss: 700.0248 - mae: 20.3396

Epoch 50/100

1570/1570 [==============================] - 1s 763us/step - loss: 707.9413 - mae: 20.2279

Epoch 51/100

1570/1570 [==============================] - 1s 757us/step - loss: 700.5992 - mae: 19.8542

Epoch 52/100

1570/1570 [==============================] - 1s 702us/step - loss: 645.3628 - mae: 19.1472

Epoch 53/100

1570/1570 [==============================] - 1s 701us/step - loss: 636.1317 - mae: 19.1446

Epoch 54/100

1570/1570 [==============================] - 1s 778us/step - loss: 667.7475 - mae: 19.5081

Epoch 55/100

1570/1570 [==============================] - 1s 750us/step - loss: 676.5157 - mae: 19.5577

Epoch 56/100

1570/1570 [==============================] - 1s 709us/step - loss: 929.1287 - mae: 22.7297

Epoch 57/100

1570/1570 [==============================] - 1s 741us/step - loss: 804.6200 - mae: 21.4121

Epoch 58/100

1570/1570 [==============================] - 1s 750us/step - loss: 725.5538 - mae: 20.5640

Epoch 59/100

1570/1570 [==============================] - 1s 726us/step - loss: 732.9226 - mae: 20.6287

Epoch 60/100

1570/1570 [==============================] - 1s 774us/step - loss: 763.7737 - mae: 20.9384

Epoch 61/100

1570/1570 [==============================] - 1s 771us/step - loss: 598.0344 - mae: 18.4678

Epoch 62/100

1570/1570 [==============================] - 1s 731us/step - loss: 783.2723 - mae: 21.0092

Epoch 63/100

1570/1570 [==============================] - 1s 689us/step - loss: 613.8795 - mae: 18.4673

Epoch 64/100

1570/1570 [==============================] - 1s 689us/step - loss: 891.0996 - mae: 21.7655

Epoch 65/100

1570/1570 [==============================] - 1s 683us/step - loss: 680.8638 - mae: 19.8157

Epoch 66/100

1570/1570 [==============================] - 1s 705us/step - loss: 660.6561 - mae: 19.6660

Epoch 67/100

1570/1570 [==============================] - 1s 698us/step - loss: 768.7937 - mae: 21.4989

Epoch 68/100

1570/1570 [==============================] - 1s 688us/step - loss: 667.2055 - mae: 19.1970

Epoch 69/100

1570/1570 [==============================] - 1s 706us/step - loss: 735.8928 - mae: 20.3921

Epoch 70/100

1570/1570 [==============================] - 1s 696us/step - loss: 843.5947 - mae: 21.6246

Epoch 71/100

1570/1570 [==============================] - 1s 676us/step - loss: 612.9269 - mae: 18.9968

Epoch 72/100

1570/1570 [==============================] - 1s 745us/step - loss: 631.1614 - mae: 18.9914

Epoch 73/100

1570/1570 [==============================] - 1s 693us/step - loss: 713.9399 - mae: 20.2510

Epoch 74/100

1570/1570 [==============================] - 1s 635us/step - loss: 628.4302 - mae: 19.2150

Epoch 75/100

1570/1570 [==============================] - 1s 651us/step - loss: 740.0641 - mae: 20.8704

Epoch 76/100

1570/1570 [==============================] - 1s 622us/step - loss: 634.4455 - mae: 19.2745

Epoch 77/100

1570/1570 [==============================] - 1s 609us/step - loss: 716.5535 - mae: 20.6797

Epoch 78/100

1570/1570 [==============================] - 1s 639us/step - loss: 735.1273 - mae: 20.3919

Epoch 79/100

1570/1570 [==============================] - 1s 657us/step - loss: 630.1802 - mae: 19.2159

Epoch 80/100

1570/1570 [==============================] - 1s 616us/step - loss: 597.2530 - mae: 18.6527

Epoch 81/100

1570/1570 [==============================] - 1s 684us/step - loss: 662.5781 - mae: 19.5194

Epoch 82/100

1570/1570 [==============================] - 1s 699us/step - loss: 631.2231 - mae: 19.0265

Epoch 83/100

1570/1570 [==============================] - 1s 638us/step - loss: 719.9138 - mae: 20.2006

Epoch 84/100

1570/1570 [==============================] - 1s 613us/step - loss: 578.8290 - mae: 18.5974

Epoch 85/100

1570/1570 [==============================] - 1s 630us/step - loss: 833.9090 - mae: 21.5101

Epoch 86/100

1570/1570 [==============================] - 1s 689us/step - loss: 724.4709 - mae: 20.2260

Epoch 87/100

1570/1570 [==============================] - 1s 650us/step - loss: 659.7275 - mae: 19.9460

Epoch 88/100

1570/1570 [==============================] - 1s 655us/step - loss: 710.6397 - mae: 20.5307

Epoch 89/100

1570/1570 [==============================] - 1s 641us/step - loss: 703.9041 - mae: 20.2339

Epoch 90/100

1570/1570 [==============================] - 1s 641us/step - loss: 747.5262 - mae: 20.9598

Epoch 91/100

1570/1570 [==============================] - 1s 671us/step - loss: 803.8232 - mae: 21.4400

Epoch 92/100

1570/1570 [==============================] - 1s 637us/step - loss: 643.2718 - mae: 19.1185

Epoch 93/100

1570/1570 [==============================] - 1s 656us/step - loss: 735.5874 - mae: 20.7587

Epoch 94/100

1570/1570 [==============================] - 1s 646us/step - loss: 659.4421 - mae: 19.6404

Epoch 95/100

1570/1570 [==============================] - 1s 699us/step - loss: 632.8841 - mae: 19.2961

Epoch 96/100

1570/1570 [==============================] - 1s 702us/step - loss: 713.7954 - mae: 20.0682

Epoch 97/100

1570/1570 [==============================] - 1s 623us/step - loss: 650.3159 - mae: 19.1298

Epoch 98/100

1570/1570 [==============================] - 1s 642us/step - loss: 786.0706 - mae: 21.4033

Epoch 99/100

1570/1570 [==============================] - 1s 625us/step - loss: 687.3713 - mae: 19.8312

Epoch 100/100

1570/1570 [==============================] - 1s 683us/step - loss: 692.3904 - mae: 20.3091

forecast = []
for time in range(len(data.pm25) - window_size):
    forecast.append(model.predict(np.array(data.pm25[time:time + window_size])[np.newaxis]))
forecast = forecast[split_time-window_size:]
results = np.array(forecast)[:, 0, 0]

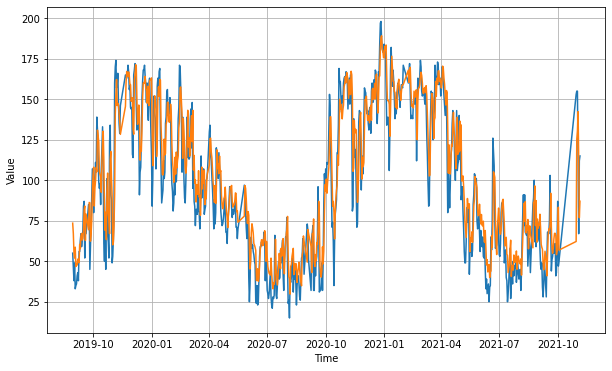

plt.figure(figsize=(10, 6))

plot_series(time_valid, x_valid)

plot_series(time_valid, results)

tf.keras.metrics.mean_absolute_error(x_valid, results).numpy()

13.240775
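The deep network reduces the validation MAE from about 15.46 to 13.24. It computes two ReLU layers of 14 units followed by a linear output unit. The NumPy sketch below writes out that forward pass with randomly initialised weights, using the kernel layout of Keras Dense layers (inputs × units), and counts the parameters: 14·14 + 14 = 210 for each hidden layer and 14 + 1 = 15 for the output, 435 in total. The function name and the random weights are illustrative only.

```python
import numpy as np


def dnn_forward(x, params):
    """Forward pass of Dense(14, relu) -> Dense(14, relu) -> Dense(1)."""
    (w1, b1), (w2, b2), (w3, b3) = params
    h1 = np.maximum(0.0, x @ w1 + b1)   # first hidden layer with ReLU
    h2 = np.maximum(0.0, h1 @ w2 + b2)  # second hidden layer with ReLU
    return h2 @ w3 + b3                 # linear output: one forecast per window


rng = np.random.default_rng(0)
window_size, hidden = 14, 14
params = [
    (rng.normal(size=(window_size, hidden)), np.zeros(hidden)),  # kernel: inputs x units
    (rng.normal(size=(hidden, hidden)), np.zeros(hidden)),
    (rng.normal(size=(hidden, 1)), np.zeros(1)),
]
n_params = sum(w.size + b.size for w, b in params)
out = dnn_forward(rng.normal(size=(3, window_size)), params)
print(n_params, out.shape)  # 435 (3, 1)
```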

model.save('pm25_DL_model')

INFO:tensorflow:Assets written to: pm25_DL_model\assets

model = tf.keras.models.load_model('pm25_DL_model')

model.predict(np.array(data.pm25[1:15])[np.newaxis])

array([[163.71252]], dtype=float32)

This model is currently deployed as "Deep learning model" in GitHub Actions.

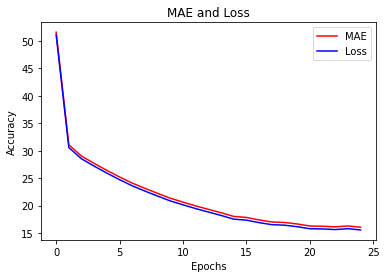

RNN

tf.keras.backend.clear_session()

tf.random.set_seed(51)

np.random.seed(51)

train_set = windowed_dataset(x_train, window_size, batch_size, shuffle_buffer_size)

model = tf.keras.models.Sequential([
    tf.keras.layers.Lambda(lambda x: tf.expand_dims(x, axis=-1),
                           input_shape=[None]),
    tf.keras.layers.SimpleRNN(14, return_sequences=True),
    tf.keras.layers.SimpleRNN(14, return_sequences=True),
    tf.keras.layers.Dense(1),
    tf.keras.layers.Lambda(lambda x: x * 200.0)
])

lr_schedule = tf.keras.callbacks.LearningRateScheduler(

lambda epoch: 1e-8 * 10**(epoch / 20))

optimizer = tf.keras.optimizers.SGD(learning_rate=1e-8, momentum=0.9)

model.compile(loss=tf.keras.losses.Huber(),

optimizer=optimizer,

metrics=["mae"])

history = model.fit(train_set, epochs=25, callbacks=[lr_schedule])

Epoch 1/25

1570/1570 [==============================] - 7s 3ms/step - loss: 255.5130 - mae: 256.0130

Epoch 2/25

1570/1570 [==============================] - 6s 3ms/step - loss: 136.1440 - mae: 136.6439

Epoch 3/25

1570/1570 [==============================] - 5s 3ms/step - loss: 44.4807 - mae: 44.9783

Epoch 4/25

1570/1570 [==============================] - 5s 3ms/step - loss: 36.5102 - mae: 37.0077

Epoch 5/25

1570/1570 [==============================] - 5s 3ms/step - loss: 36.1142 - mae: 36.6120

Epoch 6/25

1570/1570 [==============================] - 5s 3ms/step - loss: 35.6905 - mae: 36.1887

Epoch 7/25

1570/1570 [==============================] - 5s 3ms/step - loss: 35.2254 - mae: 35.7231

Epoch 8/25

1570/1570 [==============================] - 5s 3ms/step - loss: 34.7579 - mae: 35.2558

Epoch 9/25

1570/1570 [==============================] - 5s 3ms/step - loss: 34.1971 - mae: 34.6951

Epoch 10/25

1570/1570 [==============================] - 5s 3ms/step - loss: 33.6498 - mae: 34.1480

Epoch 11/25

1570/1570 [==============================] - 5s 3ms/step - loss: 32.9927 - mae: 33.4904

Epoch 12/25

1570/1570 [==============================] - 5s 3ms/step - loss: 32.3265 - mae: 32.8238

Epoch 13/25

1570/1570 [==============================] - 5s 3ms/step - loss: 31.7288 - mae: 32.2260

Epoch 14/25

1570/1570 [==============================] - 5s 3ms/step - loss: 31.1841 - mae: 31.6818

Epoch 15/25

1570/1570 [==============================] - 5s 3ms/step - loss: 30.7113 - mae: 31.2086

Epoch 16/25

1570/1570 [==============================] - 5s 3ms/step - loss: 30.3737 - mae: 30.8711

Epoch 17/25

1570/1570 [==============================] - 5s 3ms/step - loss: 30.1141 - mae: 30.6113

Epoch 18/25

1570/1570 [==============================] - 5s 3ms/step - loss: 29.7798 - mae: 30.2774

Epoch 19/25

1570/1570 [==============================] - 5s 3ms/step - loss: 29.6268 - mae: 30.1236

Epoch 20/25

1570/1570 [==============================] - 5s 3ms/step - loss: 29.4335 - mae: 29.9309

Epoch 21/25

1570/1570 [==============================] - 5s 3ms/step - loss: 29.3501 - mae: 29.8474

Epoch 22/25

1570/1570 [==============================] - 5s 3ms/step - loss: 30.9055 - mae: 31.4033

Epoch 23/25

1570/1570 [==============================] - 5s 3ms/step - loss: 29.6605 - mae: 30.1580

Epoch 24/25

1570/1570 [==============================] - 5s 3ms/step - loss: 29.5224 - mae: 30.0199

Epoch 25/25

1570/1570 [==============================] - 5s 3ms/step - loss: 28.9797 - mae: 29.4764
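In these logs the loss is almost exactly 0.5 below the MAE. Huber loss with its default delta of 1.0 produces exactly this gap. For errors larger than delta it grows linearly, as |e| - 0.5, so while nearly all errors exceed 1 the mean loss equals the MAE minus 0.5. A NumPy sketch of the element-wise formula documented for tf.keras.losses.Huber (huber is a hypothetical helper):

```python
import numpy as np


def huber(errors, delta=1.0):
    """Element-wise Huber loss: quadratic near zero, linear for large errors."""
    abs_err = np.abs(np.asarray(errors, dtype=float))
    quadratic = 0.5 * abs_err ** 2
    linear = 0.5 * delta ** 2 + delta * (abs_err - delta)
    return np.where(abs_err <= delta, quadratic, linear)


errors = np.array([40.0, -25.0, 60.0])  # all much larger than delta
gap = np.mean(np.abs(errors)) - np.mean(huber(errors))
print(round(gap, 6))  # 0.5
```

Errors with |e| ≤ 1 contribute less than 0.5 to the gap, which is why it dips slightly below 0.5 in some epochs (for example 44.9783 vs 44.4807 in epoch 3).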

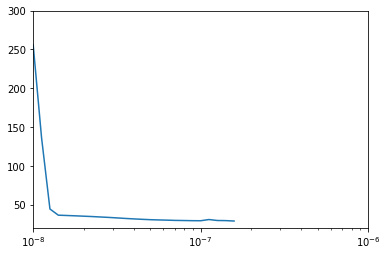

plt.semilogx(history.history["lr"], history.history["loss"])

plt.axis([1e-8, 1e-6, 20, 300])
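The scheduler multiplies the learning rate by 10 every 20 epochs, starting at 1e-8, so over epochs 0 to 24 it only reaches about 1.6e-7. The plot's x-axis therefore extends to 1e-6, well past the largest rate actually tried. Such a sweep is usually read by choosing a rate where the loss is still falling steadily, below the point where it becomes unstable. The sketch below reproduces the schedule and adds a simple helper that returns the rate with the lowest recorded loss. pick_lowest_loss_lr is hypothetical, and taking the exact minimum is only a rough heuristic.

```python
import numpy as np


def lr_for_epoch(epoch, base_lr=1e-8):
    """Same schedule as the LearningRateScheduler lambda: x10 every 20 epochs."""
    return base_lr * 10 ** (epoch / 20)


def pick_lowest_loss_lr(lrs, losses):
    """Learning rate at which the recorded loss was lowest."""
    return np.asarray(lrs)[np.argmin(losses)]


lrs = [lr_for_epoch(e) for e in range(25)]
print(f"{lrs[-1]:.2e}")  # 1.58e-07
```

With the notebook's history, pick_lowest_loss_lr(history.history["lr"], history.history["loss"]) would be a starting point. A slightly smaller rate is often safer.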

forecast = []
for time in range(len(data.pm25) - window_size):
    forecast.append(model.predict(np.array(data.pm25[time:time + window_size])[np.newaxis]))
forecast = forecast[split_time-window_size:]
results = np.array(forecast)[:, 0, 0]

plt.figure(figsize=(10, 6))

plot_series(time_valid, x_valid)

plot_series(time_valid, results)

tf.keras.metrics.mean_absolute_error(x_valid, results).numpy()

array([39.826717, 39.908363, 40.442093, ..., 39.340984, 39.340984], dtype=float32)
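The metric came back as an array rather than a single number. `tf.keras.metrics.mean_absolute_error` averages only over the last axis. If `x_valid` and `results` have different shapes, for example `(n, 1)` against `(n,)`, they broadcast to an `(n, n)` matrix and the metric returns one value per row. These values would then probably not be the forecast's MAE. This part of the notebook does not show which array carries the extra axis.

The sketch below flattens both inputs before averaging. It uses synthetic arrays, and `scalar_mae` is a hypothetical helper name.

```python
import numpy as np

def scalar_mae(y_true, y_pred):
    """Mean absolute error over all points, after flattening both inputs
    so that (n, 1) vs (n,) shapes cannot broadcast into an (n, n) matrix."""
    y_true = np.ravel(y_true)
    y_pred = np.ravel(y_pred)
    if y_true.shape != y_pred.shape:
        raise ValueError(f"length mismatch: {y_true.shape} vs {y_pred.shape}")
    return float(np.mean(np.abs(y_true - y_pred)))

y_true = np.array([[100.0], [120.0], [90.0]])   # shape (3, 1)
y_pred = np.array([110.0, 115.0, 80.0])         # shape (3,)

# Broadcasting trap: mean over the last axis of a (3, 3) difference matrix
print(np.mean(np.abs(y_true - y_pred), axis=-1))   # three values, not one
print(scalar_mae(y_true, y_pred))
```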

import matplotlib.pyplot as plt

#-----------------------------------------------------------

# Retrieve the per-epoch training MAE and loss

# from the History object returned by model.fit

#-----------------------------------------------------------

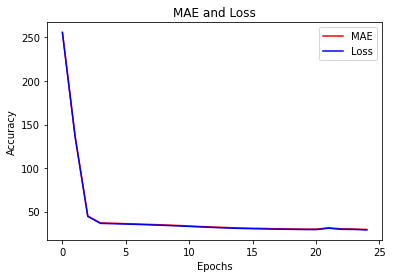

mae=history.history['mae']

loss=history.history['loss']

epochs=range(len(loss)) # Get number of epochs

#------------------------------------------------

# Plot MAE and Loss

#------------------------------------------------

plt.plot(epochs, mae, 'r')

plt.plot(epochs, loss, 'b')

plt.title('MAE and Loss')

plt.xlabel("Epochs")

plt.ylabel("MAE / Huber loss")

plt.legend(["MAE", "Loss"])

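plt.figure()

# Only 25 epochs were run, so zoom in on the later ones (start index chosen arbitrarily)

zoom_start = 10

epochs_zoom = epochs[zoom_start:]

mae_zoom = mae[zoom_start:]

loss_zoom = loss[zoom_start:]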

#------------------------------------------------

# Plot Zoomed MAE and Loss

#------------------------------------------------

plt.plot(epochs_zoom, mae_zoom, 'r')

plt.plot(epochs_zoom, loss_zoom, 'b')

plt.title('MAE and Loss')

plt.xlabel("Epochs")

plt.ylabel("MAE / Huber loss")

plt.legend(["MAE", "Loss"])

plt.show()

LSTM

def model_forecast(model, series, window_size):

    # Slice the series into overlapping windows (stride 1) and predict on them in batches of 32

    ds = tf.data.Dataset.from_tensor_slices(series)

    ds = ds.window(window_size, shift=1, drop_remainder=True)

    ds = ds.flat_map(lambda w: w.batch(window_size))

    ds = ds.batch(32).prefetch(1)

    forecast = model.predict(ds)

    return forecast

tf.keras.backend.clear_session()

tf.random.set_seed(51)

np.random.seed(51)

x_train_LSTM = tf.expand_dims(x_train, axis=-1)

train_set = windowed_dataset(x_train_LSTM, window_size, batch_size, shuffle_buffer_size)

train_set

model = tf.keras.models.Sequential([

    # Causal convolution: each output step only sees the current and earlier inputs

    tf.keras.layers.Conv1D(filters=32, kernel_size=5,
                           strides=1, padding="causal",
                           activation="relu",
                           input_shape=[None, 1]),

    tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32, return_sequences=True)),

    tf.keras.layers.Bidirectional(tf.keras.layers.LSTM(32, return_sequences=True)),

    tf.keras.layers.Dense(1),

    # Scale the outputs up toward the range of the PM2.5 readings

    tf.keras.layers.Lambda(lambda x: x * 200)

])
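The tensor shapes and parameter counts through this stack can be worked out by hand:

- `padding="causal"` with `strides=1` keeps the time length unchanged.
- Each `Bidirectional` wrapper concatenates a forward and a backward LSTM, so 32 units become 64 features.
- `Dense(1)` is applied at every time step.

The plain-Python sketch below does the arithmetic. Its totals should match what `model.summary()` reports, although that output is not shown in this notebook.

```python
def conv1d_params(kernel_size, in_channels, filters):
    # One kernel per filter plus one bias per filter
    return kernel_size * in_channels * filters + filters

def lstm_params(units, input_dim):
    # 4 gates, each with input weights, recurrent weights and a bias
    return 4 * (units * (input_dim + units) + units)

def dense_params(in_features, units):
    return in_features * units + units

conv = conv1d_params(kernel_size=5, in_channels=1, filters=32)  # output (batch, T, 32)
bilstm1 = 2 * lstm_params(units=32, input_dim=32)               # output (batch, T, 64)
bilstm2 = 2 * lstm_params(units=32, input_dim=64)               # output (batch, T, 64)
dense = dense_params(in_features=64, units=1)                  # output (batch, T, 1)
# The Lambda(x * 200) layer has no trainable weights

total = conv + bilstm1 + bilstm2 + dense
print(conv, bilstm1, bilstm2, dense, total)
# → 192 16640 24832 65 41729
```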

# Learning-rate sweep: start at 1e-8 and multiply by 10 every 20 epochs

lr_schedule = tf.keras.callbacks.LearningRateScheduler(

    lambda epoch: 1e-8 * 10**(epoch / 20))

optimizer = tf.keras.optimizers.SGD(learning_rate=1e-8, momentum=0.9)

model.compile(loss=tf.keras.losses.Huber(),

              optimizer=optimizer,

              metrics=["mae"])

history = model.fit(train_set, epochs=25, callbacks=[lr_schedule])

Epoch 1/25

1570/1570 [==============================] - 27s 12ms/step - loss: 51.0839 - mae: 51.5817

Epoch 2/25

1570/1570 [==============================] - 21s 13ms/step - loss: 30.5634 - mae: 31.0600

Epoch 3/25

1570/1570 [==============================] - 20s 13ms/step - loss: 28.5261 - mae: 29.0220

Epoch 4/25

1570/1570 [==============================] - 23s 14ms/step - loss: 27.1795 - mae: 27.6754

Epoch 5/25

1570/1570 [==============================] - 21s 13ms/step - loss: 25.9100 - mae: 26.4058

Epoch 6/25

1570/1570 [==============================] - 21s 13ms/step - loss: 24.7144 - mae: 25.2104

Epoch 7/25

1570/1570 [==============================] - 22s 14ms/step - loss: 23.5953 - mae: 24.0904

Epoch 8/25

1570/1570 [==============================] - 21s 13ms/step - loss: 22.6447 - mae: 23.1403

Epoch 9/25

1570/1570 [==============================] - 22s 14ms/step - loss: 21.7317 - mae: 22.2269

Epoch 10/25

1570/1570 [==============================] - 22s 14ms/step - loss: 20.8462 - mae: 21.3408

Epoch 11/25

1570/1570 [==============================] - 22s 14ms/step - loss: 20.1405 - mae: 20.6347

Epoch 12/25

1570/1570 [==============================] - 22s 14ms/step - loss: 19.4537 - mae: 19.9481

Epoch 13/25

1570/1570 [==============================] - 22s 14ms/step - loss: 18.8216 - mae: 19.3158

Epoch 14/25

1570/1570 [==============================] - 22s 14ms/step - loss: 18.2066 - mae: 18.7004

Epoch 15/25

1570/1570 [==============================] - 22s 14ms/step - loss: 17.5309 - mae: 18.0234

Epoch 16/25

1570/1570 [==============================] - 22s 14ms/step - loss: 17.3460 - mae: 17.8395

Epoch 17/25

1570/1570 [==============================] - 22s 14ms/step - loss: 16.8951 - mae: 17.3882

Epoch 18/25

1570/1570 [==============================] - 23s 15ms/step - loss: 16.5255 - mae: 17.0192

Epoch 19/25

1570/1570 [==============================] - 22s 14ms/step - loss: 16.4288 - mae: 16.9225

Epoch 20/25

1570/1570 [==============================] - 22s 14ms/step - loss: 16.1572 - mae: 16.6509

Epoch 21/25

1570/1570 [==============================] - 22s 14ms/step - loss: 15.7839 - mae: 16.2775

Epoch 22/25

1570/1570 [==============================] - 22s 14ms/step - loss: 15.7438 - mae: 16.2366

Epoch 23/25

1570/1570 [==============================] - 23s 15ms/step - loss: 15.6320 - mae: 16.1244

Epoch 24/25

1570/1570 [==============================] - 23s 14ms/step - loss: 15.7910 - mae: 16.2841

Epoch 25/25

1570/1570 [==============================] - 22s 14ms/step - loss: 15.5656 - mae: 16.0587

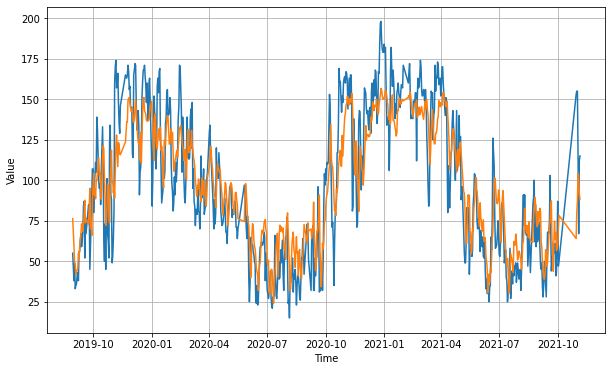

rnn_forecast = model_forecast(model, np.array(data.pm25)[..., np.newaxis], window_size)

rnn_forecast = rnn_forecast[split_time - window_size:-1, -1, 0]
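The slice `[split_time - window_size:-1, -1, 0]` lines the forecasts up with `x_valid`. It works as follows:

- `model_forecast` returns one output sequence per window, and window `i` covers `series[i:i + window_size]`.
- The slicing treats that window's last time step (`-1` on axis 1) as the prediction for `series[i + window_size]`.
- The first validation point, `series[split_time]`, therefore comes from window `split_time - window_size`.
- `:-1` drops the final window, which would predict past the end of the data.

The index-only check below uses the dataset's 2314 rows. The `window_size` and `split_time` values are illustrative assumptions, since the notebook sets its own values earlier.

```python
import numpy as np

n = 2314            # number of daily readings in this dataset
window_size = 30    # illustrative value
split_time = 2000   # illustrative value

# Same windowing as tf.data window(window_size, shift=1, drop_remainder=True)
n_windows = n - window_size + 1
window_starts = np.arange(n_windows)

# Series index that each window's last output is taken to predict
predicted_index = window_starts + window_size

kept = predicted_index[split_time - window_size:-1]
print(kept[0], kept[-1], len(kept))
# → 2000 2313 314
```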

plt.figure(figsize=(10, 6))

plot_series(time_valid, x_valid)

plot_series(time_valid, rnn_forecast)

tf.keras.metrics.mean_absolute_error(x_valid, rnn_forecast).numpy()

17.472342
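A validation MAE of about 17.5 is easier to judge next to a naive persistence forecast, which predicts each day's PM2.5 as the previous day's value. This notebook does not compute that baseline. The sketch below shows one way to compute it on a short synthetic series; `naive_forecast_mae` is a hypothetical helper name. On the real data it could be called as `naive_forecast_mae(data.pm25.values, split_time)`.

```python
import numpy as np

def naive_forecast_mae(series, split_time):
    """MAE of the persistence forecast (tomorrow = today) over the
    validation period series[split_time:]."""
    series = np.asarray(series, dtype=float)
    actual = series[split_time:]
    predicted = series[split_time - 1:-1]   # previous day's value
    return float(np.mean(np.abs(actual - predicted)))

# Synthetic values, not the Hyderabad data
toy = np.array([150, 160, 140, 145, 170, 165])
print(naive_forecast_mae(toy, split_time=3))
```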

import matplotlib.pyplot as plt

#-----------------------------------------------------------

# Retrieve the per-epoch training MAE and loss

# from the History object (fit was called without validation data)

#-----------------------------------------------------------

mae=history.history['mae']

loss=history.history['loss']

epochs=range(len(loss)) # Get number of epochs

#------------------------------------------------

# Plot MAE and Loss

#------------------------------------------------

plt.plot(epochs, mae, 'r')

plt.plot(epochs, loss, 'b')

plt.title('MAE and Loss')

plt.xlabel("Epochs")

plt.ylabel("MAE / Huber loss")

plt.legend(["MAE", "Loss"])

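plt.figure()

# Only 25 epochs were run, so zoom in on the later ones (start index chosen arbitrarily)

zoom_start = 10

epochs_zoom = epochs[zoom_start:]

mae_zoom = mae[zoom_start:]

loss_zoom = loss[zoom_start:]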

#------------------------------------------------

# Plot Zoomed MAE and Loss

#------------------------------------------------

plt.plot(epochs_zoom, mae_zoom, 'r')

plt.plot(epochs_zoom, loss_zoom, 'b')

plt.title('MAE and Loss')

plt.xlabel("Epochs")

plt.ylabel("MAE / Huber loss")

plt.legend(["MAE", "Loss"])

plt.show()